Machine learning has come a long way since its inception. Today, it enables businesses to connect with their target audiences more effectively. Be it Netflix’s recommendation engine or analytics used to combat human trafficking, machine learning is finding a place in every vertical of our daily lives.

Every day, new machine learning trends make headlines, putting businesses in a stiff race to keep up with the fast pace of development in machine learning and AI.

In this article, we look at the history of machine learning, the trends shaping its future, why the field is moving from classic machine learning toward deep learning, and the latest technologies worth learning.


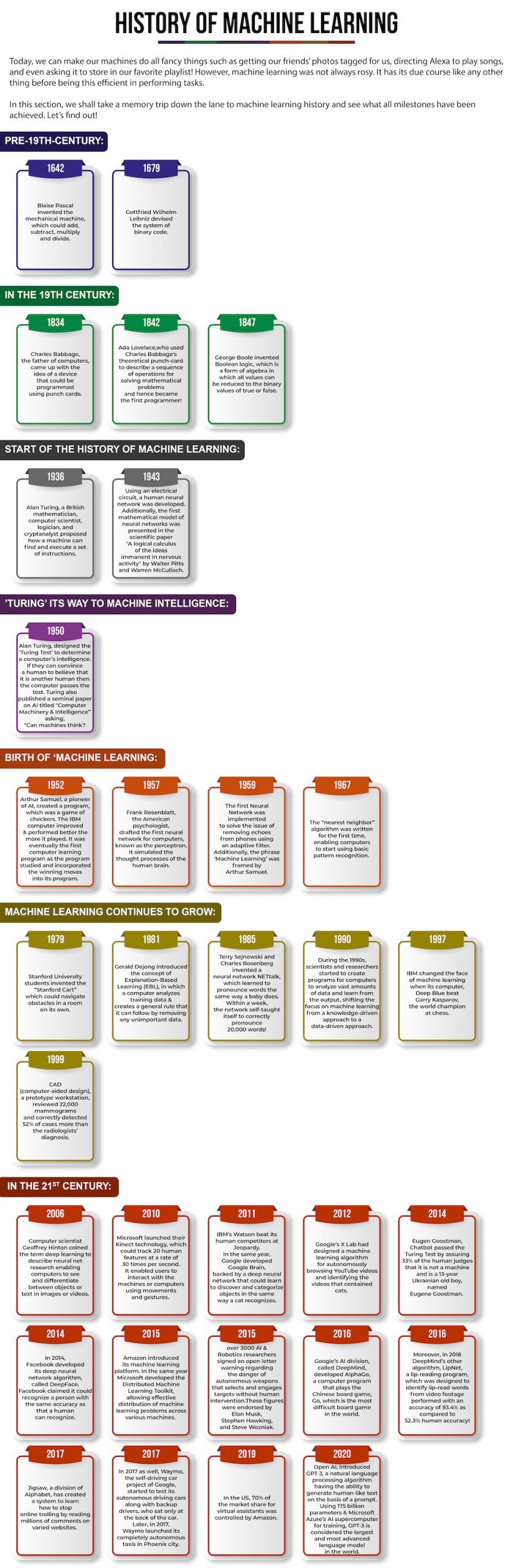

Evolution of Machine Learning

Machine learning is so intricately woven into our daily lives that we sometimes don’t even realize we are relying on it. Asking Alexa to save a playlist or having your phone tag your pictures automatically are two everyday examples. Machines do many other such tasks day in and day out, and over time, machine learning has evolved to mimic human understanding.

The foundations of machine learning date back to the 17th century.

- In 1642, Blaise Pascal invented a mechanical calculator that could add and subtract directly, and multiply and divide through repeated addition and subtraction.

- In 1679, Gottfried Wilhelm Leibniz described the binary number system.

This is where it all started. The field grew by leaps and bounds throughout the 19th, 20th, and 21st centuries.

Machine Learning Trends To Follow in 2024

With new innovations arriving and technologies being upgraded daily, machine learning has become a core part of development. Based on recent advances in ML, here are some trends to follow in 2024:

No-Code Machine Learning

No-code machine learning lets users build ML applications without hand-coding the lengthy process of preprocessing data, creating models, training them, and deploying them. Instead of writing complicated code, users build their tools through a drag-and-drop interface.

Example: Amazon SageMaker (through SageMaker Canvas, its no-code interface)

Low-code and no-code technologies are emerging trends in machine learning, offering speed and flexibility while saving time and cost. Platforms built on this approach, such as DataRobot, Clarifai, and Teachable Machine, let users build models without needing an engineer or developer.

TinyML

IoT dominates the technology world, but large-scale ML models are of limited use on small, resource-constrained IoT devices. As the saying goes, “Good things come in small packages”: TinyML runs machine learning models on low-power hardware such as microcontrollers, offering powerful solutions for smaller-scale applications.

Sending data over the network to a large server and waiting for a response takes time. To reduce latency, bandwidth, and power consumption, and to protect user privacy, TinyML runs models directly on IoT edge devices, which track and make predictions on the data they collect.
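A common way to shrink a model enough to fit on a microcontroller is post-training quantization: storing each 32-bit float weight as an 8-bit integer, which cuts storage roughly fourfold. The sketch below shows a simple affine (scale plus zero-point) int8 scheme in pure Python. It is an illustration of the idea under our own assumptions, not the exact procedure of any particular TinyML toolchain, and the function names are hypothetical.

```python
def quantize_int8(weights):
    """Map float weights to int8 values using an affine scale/zero-point scheme."""
    # Include 0.0 in the range so that zero is represented exactly.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255 or 1.0  # 256 int8 levels span the float range
    zero_point = round(-128 - w_min / scale)  # the int8 value that stands for 0.0
    # Clamp in case rounding pushes a value just outside [-128, 127].
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate float weights from their int8 representation."""
    return [(v - zero_point) * scale for v in q]


weights = [-0.5, 0.0, 0.25, 1.0]
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)
print(q)         # 8-bit integers that would be stored on the device
print(restored)  # close to the original weights, within about one scale step
```

The trade-off is a small loss of precision per weight in exchange for a much smaller memory footprint and cheaper integer arithmetic on the device.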

Automated Machine Learning (AutoML)

Traditionally, labeling data for machine learning was done manually. This was not only time-consuming but also prone to human error. Automating data labeling reduces the chances of such errors.

AutoML aims to make building machine learning models simple and accessible for developers without deep machine learning expertise. It automates many phases of the machine learning and deep learning workflow, from data preprocessing and feature engineering to model selection and neural network architecture design.

By reducing the manual effort involved, automation lowers costs, making AutoML analytical tools and technologies affordable for more businesses.
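At its core, much of what AutoML automates is a search: try candidate models or settings, score each on held-out data, and keep the best. The toy sketch below illustrates that loop by choosing the number of neighbors `k` for a k-nearest-neighbors classifier. The data and function names are hypothetical, and real AutoML tools search far larger spaces with smarter strategies.

```python
import math
from collections import Counter


def knn_predict(train, query, k):
    """Predict a label by majority vote among the k nearest training points."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def accuracy(train, val, k):
    hits = sum(knn_predict(train, x, k) == y for x, y in val)
    return hits / len(val)


def auto_select_k(train, val, candidates=(1, 3, 5, 7)):
    """Score every candidate k on validation data and keep the best one."""
    scores = {k: accuracy(train, val, k) for k in candidates}
    best_k = max(scores, key=scores.get)  # first k with the top score wins ties
    return best_k, scores


# Two clusters plus one mislabeled point, which makes k=1 a poor choice.
train = [
    ((0, 0), "A"), ((0, 1), "A"), ((1, 0), "A"), ((1, 1), "A"),
    ((5, 5), "B"), ((5, 6), "B"), ((6, 5), "B"), ((6, 6), "B"),
    ((0.5, 0.6), "B"),  # noise
]
val = [((0.4, 0.5), "A"), ((5.5, 5.5), "B")]

best_k, scores = auto_select_k(train, val)
print(best_k, scores)
```

Here the search discovers that looking at a single neighbor is fooled by the noisy point, while larger `k` values are not.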

Full-stack Deep Learning

Let’s say your team of deep learning engineers has developed a working deep learning model. Once the model is built, it still has to be connected to the outside world where the users are. To bridge that gap, the engineers next need to integrate the model into infrastructure such as:

- A cloud-based backend for mobile applications

- Edge devices such as the Raspberry Pi and NVIDIA Jetson Nano

The need to integrate deep learning solutions into products led to the development of dedicated libraries and frameworks. These help engineers adapt quickly to new business requirements by automating various deployment and training tasks.
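Connecting a trained model to users often comes down to wrapping it in a small web service that a mobile app or other backend can call. The sketch below uses only Python's standard library: a hypothetical stand-in "model" (a fixed linear scorer), a `handle_predict` function that turns a JSON request into a JSON response, and an HTTP handler around it. All names and the `/predict` route are assumptions for illustration; production teams typically use dedicated serving tools such as TensorFlow Serving or TorchServe.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical stand-in for a trained model: a fixed linear scorer.
WEIGHTS = [0.4, -0.2, 0.1]
BIAS = 0.05


def predict(features):
    if len(features) != len(WEIGHTS):
        raise ValueError(f"expected {len(WEIGHTS)} features, got {len(features)}")
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


def handle_predict(body: bytes):
    """Turn a JSON request body into (HTTP status, JSON response body)."""
    try:
        payload = json.loads(body)
        score = predict(payload["features"])
    except (ValueError, KeyError, TypeError) as exc:  # bad JSON or bad input
        return 400, json.dumps({"error": str(exc)}).encode()
    return 200, json.dumps({"score": score}).encode()


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, response = handle_predict(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(response)))
        self.end_headers()
        self.wfile.write(response)


def serve(port=8000):
    """Start the service; it blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

Keeping the request logic in `handle_predict`, separate from the HTTP plumbing, means the same function can be tested directly or reused behind a different server.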

Few-Shot, One-Shot, and Zero-Shot Learning

Few-shot learning trains a model to recognize a new class from only a handful of labeled examples, and it applies to tasks such as image classification, facial recognition, and text classification. One-shot learning needs even less data: a single example per class. Because it does not require an extensive database, it suits tasks like facial recognition, where a system may have only one reference photo of each person, but it is less suited to complex problems.
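One common approach to few-shot and one-shot classification, used by prototypical networks, is to embed every example with a pretrained network, average each class's examples into a "prototype," and assign a new input to the nearest prototype. The sketch below assumes the embeddings have already been computed (the vectors and function names are made up for illustration).

```python
import math


def class_prototypes(support):
    """Average the embeddings of each class's labeled examples into one prototype."""
    grouped = {}
    for embedding, label in support:
        grouped.setdefault(label, []).append(embedding)
    return {
        label: [sum(values) / len(vectors) for values in zip(*vectors)]
        for label, vectors in grouped.items()
    }


def classify(query, prototypes):
    """Assign the query embedding to the class with the nearest prototype."""
    return min(prototypes, key=lambda label: math.dist(query, prototypes[label]))


# Few-shot: two labeled examples per class.
few_shot_support = [
    ([1.0, 0.0], "cat"), ([0.9, 0.1], "cat"),
    ([0.0, 1.0], "dog"), ([0.1, 0.9], "dog"),
]
print(classify([0.8, 0.3], class_prototypes(few_shot_support)))  # cat

# One-shot: a single reference embedding per person, as in face recognition.
one_shot_support = [([1.0, 0.0], "alice"), ([0.0, 1.0], "bob")]
print(classify([0.2, 0.9], class_prototypes(one_shot_support)))  # bob
```

With one example per class, the prototype is simply that example, so one-shot classification falls out as a special case of the same code.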

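Zero-shot learning goes further still: the model classifies inputs into categories it never saw during training, relying on auxiliary information, such as textual descriptions or attributes of those categories, to decide which one an input belongs to.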
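A classic form of zero-shot classification describes each unseen class by a vector of attributes and matches it against attributes predicted for the input. In the sketch below we assume an attribute predictor (trained on other classes) has already produced scores for the input; the attribute list, class descriptions, and function names are all hypothetical.

```python
import math

# Hypothetical attribute descriptions for classes never seen in training:
# [has_stripes, has_hooves, lives_in_water]
CLASS_ATTRIBUTES = {
    "zebra": [1.0, 1.0, 0.0],
    "horse": [0.0, 1.0, 0.0],
    "dolphin": [0.0, 0.0, 1.0],
}


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def zero_shot_classify(predicted_attributes, class_attributes=CLASS_ATTRIBUTES):
    """Pick the unseen class whose description best matches the predicted attributes."""
    return max(
        class_attributes,
        key=lambda name: cosine(predicted_attributes, class_attributes[name]),
    )


# Attribute scores an (assumed) predictor might output for a striped, hoofed animal.
print(zero_shot_classify([0.9, 0.8, 0.1]))  # zebra
```

No zebra images were needed: the class is recognized purely from its description.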

Metaverses

Metaverses, envisioned as part of the internet’s evolution in the Web 3.0 era, are poised to become a significant phenomenon. These digital realms resemble alternate universes where individuals can engage in various activities, conduct business, generate income, and establish virtual lives.

The COVID-19 pandemic sparked a notable surge in demand for metaverses, and this trend is expected to persist, potentially setting a new direction for AI in 2024. Machine learning and AI technologies will play a crucial role in developing these platforms by streamlining processes and enhancing user experiences.

AI bots, for example, will assist users in selecting services, while machine learning will contribute to creating immersive environments within these metaverses.

Machine Learning Operations (MLOps)

A number of distinct problems, including ML pipeline design, scalability, teamwork, and the management of sensitive data at scale, have historically accompanied machine learning’s advancement.

MLOps, one of the key current trends in machine learning, aims to address each of these problems by establishing best practices for deploying and maintaining ML applications.

Because MLOps follows a business-objective-first design, its phases may resemble those of conventional machine learning development. However, MLOps offers far more transparency, smoother communication between teams, and better scalability.
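One typical MLOps practice is keeping every trained model in a versioned registry and promoting a new version to production only when it beats the current one on an agreed metric. The in-memory sketch below illustrates that gate. The class and method names are hypothetical; real teams usually rely on dedicated tools, such as the MLflow Model Registry, rather than code like this.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    version: int
    metrics: dict
    stage: str = "staging"


@dataclass
class ModelRegistry:
    """A toy, in-memory model registry with an automated promotion gate."""
    versions: list = field(default_factory=list)

    def register(self, metrics):
        mv = ModelVersion(version=len(self.versions) + 1, metrics=metrics)
        self.versions.append(mv)
        return mv

    def production(self):
        return next((v for v in self.versions if v.stage == "production"), None)

    def promote_if_better(self, candidate, metric="accuracy", min_gain=0.0):
        """Promote the candidate only if it beats production by more than min_gain."""
        current = self.production()
        if current is not None:
            if candidate.metrics[metric] <= current.metrics[metric] + min_gain:
                return False  # keep the current production model
            current.stage = "archived"
        candidate.stage = "production"
        return True


registry = ModelRegistry()
v1 = registry.register({"accuracy": 0.90})
registry.promote_if_better(v1)   # first model goes straight to production
v2 = registry.register({"accuracy": 0.88})
registry.promote_if_better(v2)   # rejected: worse than v1
v3 = registry.register({"accuracy": 0.93})
registry.promote_if_better(v3)   # accepted: v1 is archived
print(registry.production().version)
```

Making promotion a rule in code, rather than a manual decision, is what gives MLOps pipelines their repeatability and audit trail.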

Natural Speech Understanding Process Automation

Smart home technology that works with smart speakers is getting a lot of attention. One of the most popular trends in machine learning applications will be the automation of natural speech understanding.

Sophisticated voice assistants such as Siri, Google Assistant, and Alexa make this process simpler. These assistants can also control smart appliances hands-free, and they already recognize human speech with a high degree of accuracy.

AnalytixLabs offers tailor-made courses in machine learning and data science. You can opt for the Python Machine Learning Course, enroll in our Data Science 360 certification course or our exclusive PG in Data Analytics course at your convenience, or book a demo with us.

Machine Learning Technologies

Following are the various machine learning technologies that are extensively used for machine learning and deep learning projects:

Keras

Designed by Google engineer François Chollet, Keras is an open-source deep-learning framework. The primary focus of Keras is to simplify the procedure of creating deep learning models and to offer fast experimentation with deep neural networks.

Keras is extremely handy for newcomers to deep learning; it is widely regarded as one of the best frameworks for beginners because of its user-friendly design. Its syntax is easy to read and apply, and it is known for its simplicity, modularity, fast prototyping, and ease of extensibility.

Keras commonly uses TensorFlow as its backend, and TensorFlow can also be used to deploy Keras models. The same Python code runs on both CPUs (central processing units) and GPUs (graphics processing units).

Keras is written in Python and has historically supported several backends, including TensorFlow, Theano, Microsoft Cognitive Toolkit, and PlaidML; an R interface is also available. It is used by major organizations such as CERN, Yelp, Google, Netflix, and Uber.
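To show what that simplicity looks like in practice, here is a minimal sketch of defining, compiling, and training a small classifier with the Keras `Sequential` API. It assumes TensorFlow 2.x with Keras installed; the data is random and purely illustrative, so the resulting accuracy means nothing.

```python
import numpy as np
from tensorflow import keras

# Synthetic stand-in data (an assumption for illustration): 100 samples,
# 4 features each, and an integer class label in {0, 1, 2}.
rng = np.random.default_rng(seed=0)
X = rng.random((100, 4)).astype("float32")
y = rng.integers(0, 3, size=100)

# A small fully connected network defined layer by layer.
model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(16, activation="relu"),
    keras.layers.Dense(3, activation="softmax"),
])
model.compile(
    optimizer="adam",
    loss="sparse_categorical_crossentropy",  # integer labels, not one-hot
    metrics=["accuracy"],
)
model.fit(X, y, epochs=5, batch_size=16, verbose=0)

# Each row is a probability distribution over the 3 classes.
probs = model.predict(X[:5], verbose=0)
print(probs.shape)
```

The whole model, from architecture to training loop, fits in a dozen readable lines, which is the main reason Keras is recommended to beginners.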

TensorFlow

Google released TensorFlow in 2015 to support its research and production goals. This open-source software offers APIs in various languages, including Python, C++, Haskell, Java, Go, Rust, and JavaScript, some of them maintained by the community. TensorFlow is a symbolic math library built around dataflow graphs, which makes it well suited to developing neural networks.

TensorFlow, a well-maintained and widely used machine learning framework, is known for its documentation and training support. It suits dataflow programming across numerous tasks and makes it easy for developers to build and deploy machine learning applications.

This comprehensive and flexible solution is easy to use. It offers scalable production and deployment options, provides several levels of abstraction for building and training models, and supports other platforms such as Android. Prominent companies across industries and domains use it widely, including Intel, Twitter, Dropbox, eBay, and Uber.
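The sketch below shows TensorFlow's lower-level building blocks: `tf.function` traces a Python function into a dataflow graph, and `tf.GradientTape` computes gradients automatically. It fits a line to toy data generated from y = 2x + 1 (our own assumption for illustration) using plain gradient descent, and assumes TensorFlow 2.x.

```python
import tensorflow as tf

# Toy data sampled from y = 2x + 1 (an assumption for illustration).
xs = tf.constant([0.0, 1.0, 2.0, 3.0, 4.0])
ys = 2.0 * xs + 1.0

w = tf.Variable(0.0)
b = tf.Variable(0.0)
LEARNING_RATE = 0.05


@tf.function  # traces this Python function into a TensorFlow dataflow graph
def train_step():
    with tf.GradientTape() as tape:
        predictions = w * xs + b
        loss = tf.reduce_mean(tf.square(predictions - ys))  # mean squared error
    # Automatic differentiation: gradient of the loss w.r.t. each variable.
    dw, db = tape.gradient(loss, [w, b])
    w.assign_sub(LEARNING_RATE * dw)
    b.assign_sub(LEARNING_RATE * db)
    return loss


for _ in range(500):
    loss = train_step()

print(f"w={w.numpy():.3f}, b={b.numpy():.3f}, loss={loss.numpy():.6f}")
```

Higher-level APIs such as Keras wrap this same machinery, so you can drop down to this level when you need a custom training loop.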

PyTorch

Torch, the oldest ML technology on this list, was released in 2002. It offers a wide range of deep learning algorithms. Torch is scripted in Lua and has an underlying implementation in C.

Torch’s primary features include N-dimensional arrays, linear algebra routines, and numerical optimization. It offers efficient GPU support and runs on platforms including iOS and Android.

Tech giants including Google, Facebook, Twitter, and NVIDIA have used Torch’s deep learning framework.

Based on Torch, PyTorch was developed by Facebook’s AI Research lab (FAIR) and released in 2017. This open-source Python library is mainly used for computer vision and NLP use cases, with companion libraries such as torchtext, torchvision, and torchaudio for text, vision, and audio tasks.

PyTorch is beginner-friendly and offers a Pythonic way of building and running deep learning models. It is known for its simplicity, flexibility, and speed, and it avoids unnecessary complexity. It also uses memory efficiently and builds its computational graphs dynamically.
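"Dynamic computational graphs" means PyTorch records operations as they run, so ordinary Python control flow can decide the shape of the computation for each input. In this minimal sketch (assuming PyTorch is installed; the `forward` function is our own example), a `while` loop keeps doubling a tensor until its norm reaches 100, and autograd still differentiates through whatever steps actually ran.

```python
import torch


def forward(x: torch.Tensor) -> torch.Tensor:
    # How many times the loop runs depends on the data; autograd records
    # exactly the operations that executed for this particular input.
    y = x * 2
    while y.norm() < 100:
        y = y * 2
    return y.sum()


x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
out = forward(x)
out.backward()  # backpropagate through the graph built during this call
print(x.grad)   # tensor([32., 32., 32.])
```

For this input the loop stops once y = 32x, so the gradient of the sum with respect to each element is 32; a different input could produce a different graph and a different gradient.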

Theano

Theano, originally released in 2007, is an open-source Python library for building a wide range of machine learning models. As one of the oldest libraries of its kind, it has long been treated as an industry benchmark.

It lets users define, optimize, and evaluate mathematical expressions, including those involving multi-dimensional arrays and matrices.

Theano inspired much of the later development in deep learning and was designed for training deep neural networks. It runs on both CPUs and GPUs, compiles expressions into efficient code that integrates with libraries such as NumPy and BLAS, and includes code-testing capabilities.

Additionally, it offers symbolic differentiation: given a formula, it can find the derivative with respect to a specified variable, producing a new formula.
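To make "symbolic differentiation" concrete, the pure-Python sketch below represents a formula as a tree and applies the sum, product, and power rules to produce a new formula tree for the derivative. This is not Theano's API (Theano builds and optimizes its own symbolic graphs); the tuple encoding and the functions `d` and `evaluate` are hypothetical, and no algebraic simplification is attempted.

```python
# Expressions are nested tuples: ("const", c), ("var", name),
# ("add", a, b), ("mul", a, b), and ("pow", a, n) with a constant integer n.

def d(expr, var):
    """Return a new expression tree for the derivative of expr w.r.t. var."""
    kind = expr[0]
    if kind == "const":
        return ("const", 0)
    if kind == "var":
        return ("const", 1 if expr[1] == var else 0)
    if kind == "add":  # sum rule
        return ("add", d(expr[1], var), d(expr[2], var))
    if kind == "mul":  # product rule
        a, b = expr[1], expr[2]
        return ("add", ("mul", d(a, var), b), ("mul", a, d(b, var)))
    if kind == "pow":  # power rule combined with the chain rule
        a, n = expr[1], expr[2]
        return ("mul", ("mul", ("const", n), ("pow", a, n - 1)), d(a, var))
    raise ValueError(f"unknown node {kind!r}")


def evaluate(expr, env):
    """Numerically evaluate an expression tree given variable values."""
    kind = expr[0]
    if kind == "const":
        return expr[1]
    if kind == "var":
        return env[expr[1]]
    if kind == "add":
        return evaluate(expr[1], env) + evaluate(expr[2], env)
    if kind == "mul":
        return evaluate(expr[1], env) * evaluate(expr[2], env)
    if kind == "pow":
        return evaluate(expr[1], env) ** expr[2]
    raise ValueError(f"unknown node {kind!r}")


# f(x, y) = x^3 + 2*x*y, so df/dx = 3x^2 + 2y and df/dy = 2x.
x, y = ("var", "x"), ("var", "y")
f = ("add", ("pow", x, 3), ("mul", ("mul", ("const", 2), x), y))
df_dx = d(f, "x")  # a new formula, not a number
print(evaluate(df_dx, {"x": 2, "y": 5}))  # 22
```

Because the derivative is itself an expression, it can be inspected, simplified, or compiled before any numbers are plugged in, which is the property Theano exploited.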

Caffe

Developed by Yangqing Jia, Convolutional Architecture for Fast Feature Embedding, or Caffe, is an open-source deep learning framework. Written in C++, Caffe includes a Python interface and can perform computations on both CPU and GPU. Researchers, academics, and industries extensively use it.

Caffe is known for its speed, expressiveness, and modularity, and it is used mostly for computer vision applications. It can process more than sixty million images per day using a single NVIDIA K40 GPU.

Its main use case is Convolutional Neural Networks (CNNs). In 2017, Facebook expanded the framework with Caffe2, which added support for more advanced deep learning architectures such as Recurrent Neural Networks.

Microsoft Cognitive Toolkit (CNTK)

In 2016, Microsoft released its open-source deep learning framework, the Microsoft Cognitive Toolkit, previously known as CNTK. Microsoft claims its framework is capable of “training deep learning algorithms to function like the human brain,” and has described it as faster than its counterparts.

The toolkit’s key attribute is its support for Python, C++, and BrainScript. It integrates easily with Microsoft Azure, interoperates with NumPy, and uses resources efficiently. Products such as Skype, Cortana, Bing, and Xbox widely use the Microsoft Cognitive Toolkit.

Scikit-learn

Scikit-learn (sklearn), first released in 2007, is one of the most comprehensive libraries for ML in Python. It offers a complete toolkit for building and evaluating a wide range of machine learning algorithms. This open-source library is built on NumPy, SciPy, and Matplotlib.

It covers the whole machine learning workflow: data preprocessing and transformation; statistical modeling, including regression, classification, clustering, and dimensionality reduction; validation using cross-validation; and metrics for evaluating models.
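The sketch below ties several of those pieces together: preprocessing (`StandardScaler`), a classification model (`LogisticRegression`), and 5-fold cross-validation, all on scikit-learn's built-in Iris dataset. The particular model and settings are our own illustrative choices.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Built-in Iris dataset: 150 flowers, 4 measurements each, 3 species.
X, y = load_iris(return_X_y=True)

# Chaining preprocessing and model means the scaler is re-fit on each
# training fold and never sees the held-out fold.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# 5-fold cross-validation; for classifiers the default score is accuracy.
scores = cross_val_score(model, X, y, cv=5)
print(f"accuracy per fold: {scores.round(3)}")
print(f"mean accuracy: {scores.mean():.3f}")
```

Wrapping the steps in a pipeline is a small design choice that prevents data leakage during validation.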

Alongside scikit-learn, the pandas and NumPy libraries are commonly used to import, manipulate, and summarize data, while Matplotlib and Seaborn are popular choices for visualizing it.


Accord.NET

Accord.NET is an open-source machine learning framework written in C#. Released in 2010, it combines audio and image processing libraries. It is an end-to-end framework for building production-grade applications in scientific computing, computer audition, signal processing, and statistics.

Its wide range of libraries makes it suitable for creating applications in artificial neural networks, image processing, statistical data processing, and computer vision.

Conclusion

In this article, we looked in depth at machine learning trends, the technologies behind innovative machine learning projects, and the future of machine learning. Deep learning, built on neural networks that can learn and identify complex patterns in vast amounts of data when given extensive computing power, has become a powerful state-of-the-art technology.

FAQs

- What is the new technology in machine learning?

Today’s key machine learning technologies are frameworks for data mining and analysis, mostly Python libraries and packages such as pandas, NumPy, SciPy, Matplotlib, Seaborn, scikit-learn, Keras, TensorFlow, PyTorch, and NLTK.

These tools encompass each task needed for data science, including ingestion of data, data munging, visualization of data, data preprocessing, data transformation, feature selection, model building, training the model, evaluation of the model, and fine-tuning the model.

- What are the emerging trends in AI?

The emerging trends in machine learning are:

- No-Code Machine Learning

- TinyML

- AutoML

- Machine Learning Operations (MLOps)

- Full-stack Deep Learning

- Generative Adversarial Networks

- Unsupervised ML

- Reinforcement Learning

- Few-Shot, One-Shot, and Zero-Shot Machine Learning

- Why are machine learning trends emerging so fast?

Machine learning trends are emerging so fast because ML can quickly, repeatedly, and automatically apply complex mathematical computations to big data.