In today’s data-driven world, the ability to extract valuable information from raw data is a fundamental skill for IT professionals. Data extraction has become an essential part of many fields, including data engineering, software development, and business analytics.

Data extraction enables organizations across industries to unlock insights and make informed decisions. It lets them integrate data from many sources into analysis and reporting systems efficiently and at scale.

In this article, we will discuss what data extraction is, why it matters, and how it is done. We will explore the main extraction techniques, popular tools, real-world applications, and where the field is heading.

What is Data Extraction?

Data extraction refers to collecting, retrieving, or importing data from structured or unstructured sources, such as databases, websites, documents, and spreadsheets.

The extracted data is then converted into a usable format for further analysis, processing, or storage. As the first stage of the data management cycle, extraction is the foundation for gaining valuable insights and making informed decisions.

There are different methods and techniques for data extraction. Manual data entry involves people copying data by hand and typing it into another system. It is time-consuming and error-prone but still necessary in certain cases.

Automation plays a significant role: scripts written in languages such as Python, Java, or R automate data collection and processing. Custom scripts connect to data sources, retrieve the relevant information, and transform it into a structured format suitable for analysis.
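
As a minimal sketch of such a script, the Python code below reads raw text lines in an assumed `region;product;amount` layout, skips comments and blank lines, and turns each line into a typed record. The layout, the `Sale` class, and the function name are hypothetical and only illustrate the retrieve-and-structure pattern.

```python
from dataclasses import dataclass


@dataclass
class Sale:
    """A structured record produced by the extraction script (hypothetical schema)."""
    region: str
    product: str
    amount: float


def extract_sales(raw_text: str) -> list[Sale]:
    """Parse raw 'region;product;amount' lines into typed Sale records.

    Blank lines and lines starting with '#' (comments) are skipped.
    """
    records = []
    for line in raw_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the delimiter and trim stray whitespace around each field.
        region, product, amount = (part.strip() for part in line.split(";"))
        records.append(Sale(region=region, product=product, amount=float(amount)))
    return records


raw = """
# region;product;amount
North; Widget ;19.99
South;Gadget;5
"""
for sale in extract_sales(raw):
    print(sale)
```

The same structure scales to real sources: only the reading step changes (a file, a network response), while the parse-and-type step stays the same.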

Web scraping is another widely employed technique, using automated tools or bots to navigate websites, extract specific data based on predefined rules, and save it in a structured format. The method is particularly useful for market research, competitive analysis, and data aggregation tasks.
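
The sketch below shows the core of rule-based scraping using only Python's standard `html.parser` module. It assumes a page where each product has a `<span class="name">` and a `<span class="price">`; those class names stand in for the "predefined rules" and are hypothetical. The HTML is an inline string so the example runs offline; in practice the page would be downloaded first (for example with `urllib.request`), and the site's terms of use and `robots.txt` should be respected.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collect (name, price) pairs from <span class="name"> / <span class="price"> tags.

    The class names are an assumed page structure, standing in for the
    extraction rules a real scraper would be configured with.
    """

    def __init__(self):
        super().__init__()
        self.products = []
        self._current_field = None  # "name" or "price" while inside a matching span
        self._current = {}

    def handle_starttag(self, tag, attrs):
        if tag == "span":
            css_class = dict(attrs).get("class")
            if css_class in ("name", "price"):
                self._current_field = css_class

    def handle_endtag(self, tag):
        if tag == "span":
            self._current_field = None

    def handle_data(self, data):
        if self._current_field is None:
            return  # ignore text outside the spans we care about
        self._current[self._current_field] = data.strip()
        # Once both fields of a product are seen, emit one structured row.
        if "name" in self._current and "price" in self._current:
            price = float(self._current["price"].lstrip("$"))
            self.products.append({"name": self._current["name"], "price": price})
            self._current = {}


html = """
<div class="product"><span class="name">Widget</span><span class="price">$19.99</span></div>
<div class="product"><span class="name">Gadget</span><span class="price">$5.00</span></div>
"""
parser = ProductParser()
parser.feed(html)
print(parser.products)
```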

Database queries leverage SQL (Structured Query Language) to retrieve specific data from databases based on predefined conditions. Developers write queries to gather the required information and store it in separate files or database tables.
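
Here is a minimal sketch of that workflow using Python's built-in `sqlite3` module. An in-memory database stands in for a real source system, and the `orders` table and its columns are hypothetical. The query keeps only shipped orders and only the needed columns, and the result is written out as CSV (to an in-memory buffer here, in place of a separate file).

```python
import csv
import io
import sqlite3

# Build a small in-memory database standing in for a real source system.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL, status TEXT)")
conn.executemany(
    "INSERT INTO orders (customer, total, status) VALUES (?, ?, ?)",
    [("alice", 120.0, "shipped"), ("bob", 35.5, "pending"), ("carol", 80.0, "shipped")],
)

# The extraction query: a predefined condition (status) and only the columns we need.
# The ? placeholder lets the driver bind the value safely.
rows = conn.execute(
    "SELECT id, customer, total FROM orders WHERE status = ? ORDER BY id",
    ("shipped",),
).fetchall()

# Store the result separately as CSV (an in-memory buffer stands in for a file).
buffer = io.StringIO()
writer = csv.writer(buffer)
writer.writerow(["id", "customer", "total"])
writer.writerows(rows)
print(buffer.getvalue())
```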

Application Programming Interfaces (APIs) provide a structured approach for accessing and extracting data from software applications. APIs define rules and protocols, enabling developers to retrieve specific information programmatically and seamlessly integrate it into their systems.
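
The following sketch shows the two halves of API-based extraction in Python: fetching a JSON document over HTTP, and keeping only the fields you need. The endpoint URL, the `{"data": [...]}` response shape, and the `active` flag are assumptions for illustration. A real API's documentation defines its URL, authentication, and payload. The demonstration uses a sample payload so it runs without network access.

```python
import json
import urllib.request

# Hypothetical endpoint; substitute the real API's documented URL and authentication.
API_URL = "https://api.example.com/v1/customers?page=1"


def fetch_json(url: str, timeout: float = 10.0) -> dict:
    """Download and decode a JSON document from an HTTP API."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


def extract_customers(payload: dict) -> list[dict]:
    """Keep only id and email of active customers from an assumed {"data": [...]} shape."""
    return [
        {"id": item["id"], "email": item["email"]}
        for item in payload.get("data", [])
        if item.get("active", False)
    ]


# Offline demonstration with a sample payload instead of calling fetch_json(API_URL):
sample = json.loads(
    '{"data": [{"id": 1, "email": "a@example.com", "active": true},'
    ' {"id": 2, "email": "b@example.com", "active": false}]}'
)
print(extract_customers(sample))
```

Keeping the fetch and the extraction in separate functions makes the extraction logic testable without a live API.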

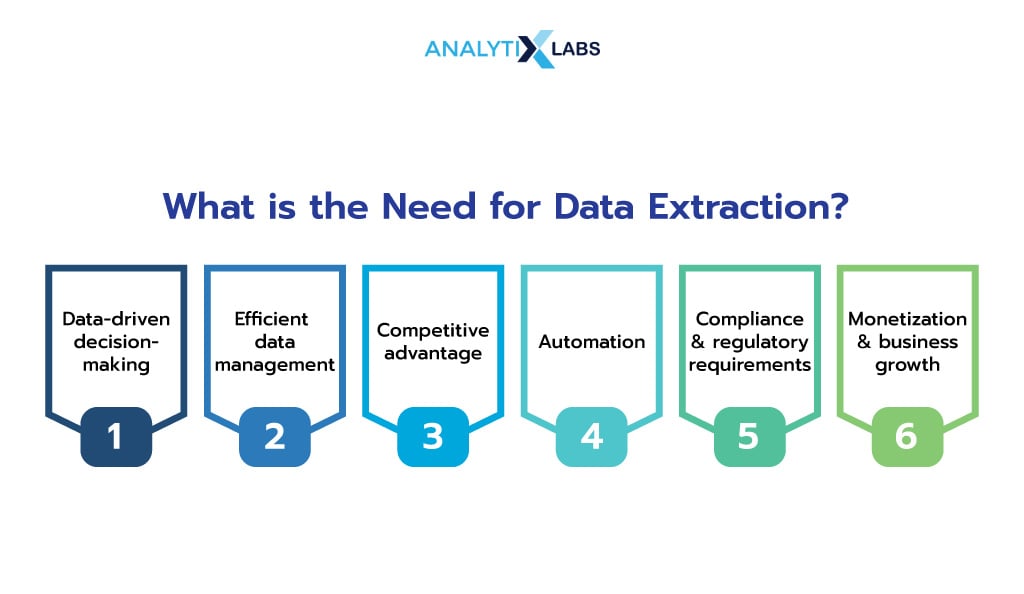

Why do you need data extraction?

Data extraction plays a crucial role in an era of data-driven decision-making and ever-growing volumes and varieties of data sources. Several reasons explain why it has become indispensable across industries:

- Data-driven decision-making: Businesses that base their decisions on accurate, up-to-date data are better placed to succeed. Data extraction provides organizations with current information they can use to make informed decisions.

- Efficient data management: Data extraction enables businesses to collect and consolidate data from various sources in a unified, structured format. It leads to better organization and management of data, facilitating a smoother workflow.

- Competitive advantage: A strong data extraction strategy can help an organization analyze its operations and performance, identify areas for improvement, and implement necessary changes. These insights can give it an edge over competitors.

- Automation: Data extraction tools and techniques allow businesses to automate the time-consuming and labor-intensive process of collecting and organizing data. This automation results in reduced human error and increased efficiency.

- Compliance and regulatory requirements: Organizations operate in regulatory environments that demand proper data management. A well-designed extraction process helps maintain accurate, auditable records, which supports compliance with requirements such as GDPR, HIPAA, or PCI-DSS.

- Monetization and business growth: Extracted data can be leveraged for monetization purposes, such as offering data-driven services, creating new revenue streams, or providing valuable insights to external partners or customers.

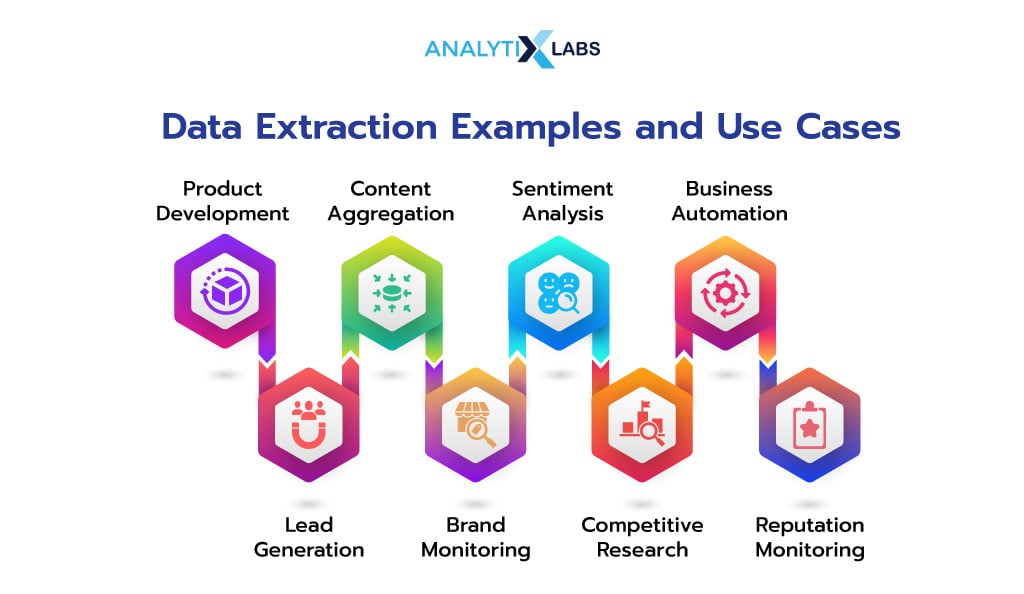

Data Extraction Examples and Use Cases

Data extraction is used in various scenarios, including business intelligence, automation, and content aggregation. Here are some examples of its use cases:

Product Development

Collecting product specifications from sources such as manufacturer websites, competitor websites, and product reviews is a common use case of data extraction. It helps businesses better anticipate customer needs and develop more effective products. For example, a product development team could use web scraping tools to collect product details, including features and prices, from manufacturer websites.

Lead Generation

Data extraction plays a vital role in lead generation by collecting relevant contact information from various sources. Businesses use this data to reach prospective customers and nurture relationships with existing customers.

For instance, a marketing agency can extract data from online directories, social media platforms, or industry-specific websites to identify potential leads and their contact details. The extracted data can be used for targeted marketing campaigns, lead nurturing, and sales outreach.

Content Aggregation

Collecting content from multiple sources and displaying it in one place is an important use case of data extraction. It lets businesses quickly generate comprehensive reports on market trends or customer preferences.

For example, an analytics team could use web scraping tools to scrape news sites for articles related to their target audience and then aggregate them into one report for better insights.

Brand Monitoring

Data extraction is also used for brand monitoring, which involves collecting online conversations about a brand and its products. It allows businesses to track customer sentiment and identify potential issues before they become widespread.

For example, an analytics team could use web scraping tools to scrape social media sites for posts about their brand and then analyze the data for feedback or complaints.

Sentiment Analysis

Sentiment analysis involves extracting and analyzing data to understand the sentiment expressed toward a brand, product, or topic.

For instance, a company can pull data from social media posts, customer surveys, and customer service interactions to determine customer satisfaction levels, identify common issues, and make data-driven improvements to enhance customer experience.

Competitive Research

Data extraction plays a crucial role in competitive research by gathering data about competitors’ strategies, pricing, and customer sentiment.

For example, a retail company can gather data from competitors’ websites, customer reviews, and social media to analyze product offerings, pricing trends, and customer feedback. This information helps businesses stay competitive, identify market gaps, and make informed decisions.

Business Automation

Data extraction enables businesses to automate repetitive tasks and streamline operations. For example, a financial institution can pull data from invoices, receipts, and financial statements to automate data entry processes, decrease errors, and improve efficiency. This automation saves time and resources, allowing employees to focus on higher-value tasks.

Reputation Monitoring

Data extraction makes online reputation monitoring easier. You can extract data from review websites, news articles, and social media platforms. This data helps businesses track and analyze customer feedback, identify potential reputation risks, and take proactive measures to protect their brand image. For example, a hotel chain can extract data from review websites to monitor guest satisfaction levels and address any negative feedback promptly.

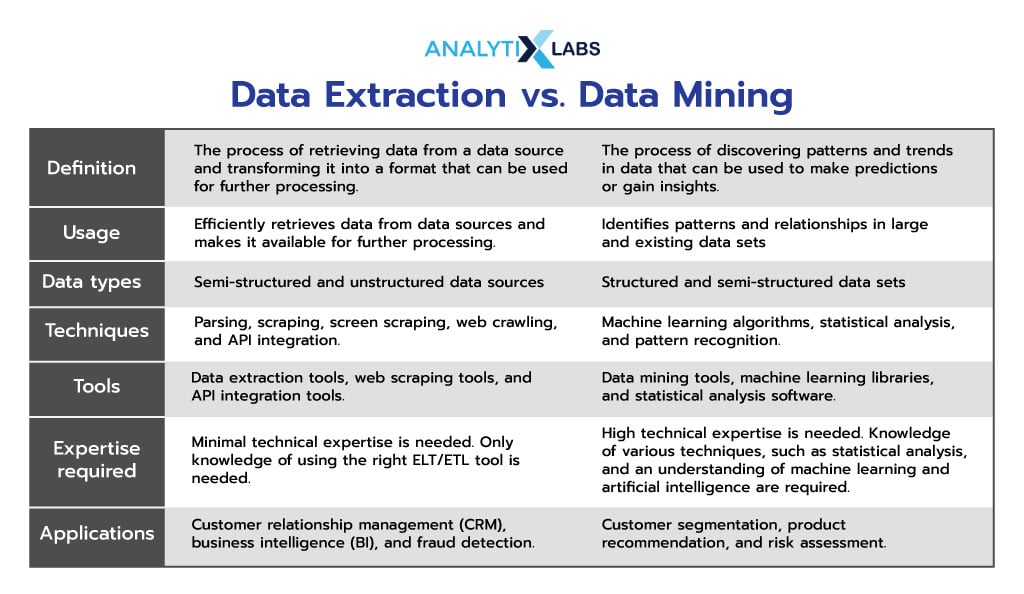

Data Extraction vs Data Mining

Data extraction and data mining are two essential processes in data analysis. Each serves a specific purpose and contributes to the overall data-driven decision-making process.

In terms of purpose,

- Data extraction focuses on pulling data from various sources and preparing it for further analysis. Its goal is to gather relevant data and transform it into a usable format.

- Data mining, on the other hand, aims to analyze the prepared data to uncover patterns, relationships, and insights. It focuses on extracting valuable information and knowledge from the data.

Data extraction employs techniques such as web scraping, API integration, or database queries to retrieve data from various sources. In comparison, data mining utilizes statistical techniques, machine learning algorithms, and data visualization methods to analyze and interpret the data.

The outcome of data extraction is a well-prepared dataset ready for analysis. It ensures the collected data is accurate, complete, and appropriately formatted. In contrast, the outcome of data mining is the extraction of valuable insights, patterns, or relationships from the analyzed data. It provides actionable knowledge that can be used for decision-making and problem-solving.

Data Extraction and ETL

Data extraction and ETL (Extract, Transform, Load) are critical to data-driven decision-making. Extraction is the first stage of an ETL pipeline, and together these processes ensure that accurate, accessible data is available for analysis and enable effective data management.

Data extraction retrieves relevant and up-to-date information from diverse sources such as databases, APIs, files, or websites (via web scraping). The subsequent transformation phase cleans, filters, validates, and structures the data to meet predefined standards, which includes converting data types, aggregating information, and performing data cleansing operations.

Once transformed, the data is loaded into designated destinations such as data warehouses or operational databases. Automating this step and designing it to scale keeps storage efficient and makes analysis faster.
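
To make the three stages concrete, here is a minimal ETL sketch in Python. Two in-memory SQLite databases stand in for a source system and a warehouse, and the table names, columns, and cleaning rules are hypothetical. Real pipelines add logging, error handling, and scheduling around the same extract, transform, and load steps.

```python
import sqlite3

# Source system (extract side) and warehouse (load side), both in memory for the sketch.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE raw_sales (id INTEGER, country TEXT, amount TEXT)")
source.executemany(
    "INSERT INTO raw_sales VALUES (?, ?, ?)",
    [(1, " us ", "10.50"), (2, "DE", "7"), (3, "us", "n/a")],
)
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, country TEXT, amount REAL)")


def extract(conn):
    """Extract: read raw rows from the source."""
    return conn.execute("SELECT id, country, amount FROM raw_sales ORDER BY id").fetchall()


def transform(rows):
    """Transform: standardize country codes, convert amounts, drop invalid rows."""
    clean = []
    for row_id, country, amount in rows:
        try:
            value = float(amount)
        except ValueError:
            continue  # validation: skip rows whose amount is not numeric
        clean.append((row_id, country.strip().upper(), value))
    return clean


def load(conn, rows):
    """Load: write the cleaned rows into the destination table."""
    with conn:  # commits the transaction if no exception is raised
        conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)


load(warehouse, transform(extract(source)))
print(warehouse.execute("SELECT * FROM sales ORDER BY id").fetchall())
```

Keeping each stage a separate function mirrors how ETL tools model pipelines and lets each stage be tested on its own.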

Specialized ETL tools such as Informatica PowerCenter, and distributed processing engines such as Apache Spark, improve productivity, particularly in large-scale projects.

These processes ensure data consistency, accuracy, and reliability, leading to valuable insights and informed decisions. A solid understanding of data extraction and ETL helps professionals use data to drive innovation and make better choices.

Understanding the Data Extraction Process

Data extraction is an essential step in data management and analysis. It involves pulling data from sources such as databases, APIs, files, and websites. This step supplies the information needed for analysis, and doing it accurately and efficiently calls for specialized techniques and tools.

IT professionals must be proficient in SQL, programming languages, and data querying methods to navigate different data sources and extract relevant datasets.

Ensuring data quality and integrity during extraction is crucial: the extracted data must be verified as accurate, up-to-date, and complete. Data profiling techniques and validation checks are used to identify and correct anomalies or inconsistencies.
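
A minimal validation pass might look like the Python sketch below. It checks completeness (required fields present and non-empty), uniqueness of an `id` field, and freshness of an `updated` date. The schema, field names, and 30-day freshness threshold are assumptions for illustration; real profiling tools compute many more statistics.

```python
from datetime import date

REQUIRED_FIELDS = ("id", "email", "updated")  # assumed schema for the sketch


def validate(records, max_age_days=30, today=None):
    """Return a list of human-readable problems found in extracted records.

    Checks completeness (required fields present and non-empty),
    uniqueness of 'id', and freshness of the ISO-formatted 'updated' date.
    """
    today = today or date.today()
    problems = []
    seen_ids = set()
    for i, rec in enumerate(records):
        missing = [f for f in REQUIRED_FIELDS if rec.get(f) in (None, "")]
        if missing:
            problems.append(f"record {i}: missing {', '.join(missing)}")
            continue
        if rec["id"] in seen_ids:
            problems.append(f"record {i}: duplicate id {rec['id']}")
        seen_ids.add(rec["id"])
        age = (today - date.fromisoformat(rec["updated"])).days
        if age > max_age_days:
            problems.append(f"record {i}: stale ({age} days old)")
    return problems


records = [
    {"id": 1, "email": "a@example.com", "updated": "2024-05-01"},
    {"id": 1, "email": "b@example.com", "updated": "2024-05-02"},
    {"id": 2, "email": "", "updated": "2024-05-03"},
    {"id": 3, "email": "c@example.com", "updated": "2024-01-01"},
]
for problem in validate(records, today=date(2024, 5, 10)):
    print(problem)
```

Returning a list of problems, rather than raising on the first one, lets a pipeline report every issue in a batch before deciding whether to reject it.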

Additionally, data extraction often involves handling structured, semi-structured, and unstructured data. This requires expertise in file formats such as CSV, JSON, and XML, as well as unstructured text documents. Data engineers and developers should be adept at parsing techniques that extract meaningful information from these diverse sources.
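
The sketch below parses the same two customer records delivered as CSV, JSON, and XML, using only Python's standard library, and normalizes all three into one list of dictionaries. The sample data and field names are hypothetical. It shows the common pattern of writing one small parser per format that all emit the same target structure.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# The same two customers delivered in three formats (sample data).
CSV_TEXT = "id,name\n1,Alice\n2,Bob\n"
JSON_TEXT = '[{"id": 1, "name": "Alice"}, {"id": 2, "name": "Bob"}]'
XML_TEXT = (
    "<customers>"
    "<customer id='1'><name>Alice</name></customer>"
    "<customer id='2'><name>Bob</name></customer>"
    "</customers>"
)


def from_csv(text):
    """CSV: each row becomes a dict keyed by the header line."""
    return [{"id": int(row["id"]), "name": row["name"]} for row in csv.DictReader(io.StringIO(text))]


def from_json(text):
    """JSON: already a list of objects; just normalize types."""
    return [{"id": int(item["id"]), "name": item["name"]} for item in json.loads(text)]


def from_xml(text):
    """XML: id is an attribute, name is a child element."""
    root = ET.fromstring(text)
    return [{"id": int(el.get("id")), "name": el.findtext("name")} for el in root.iter("customer")]


# All three parsers normalize to the same list of dicts.
print(from_csv(CSV_TEXT))
print(from_json(JSON_TEXT))
print(from_xml(XML_TEXT))
```

For XML from untrusted sources, note that the Python documentation warns that `xml.etree.ElementTree` is not secure against maliciously constructed data.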

Moreover, data extraction may encompass data transformation operations. These operations involve cleansing the data to eliminate duplicates or irrelevant entries, converting data types, aggregating data from multiple sources, and standardizing the data format for consistency.
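
As a hedged illustration of these cleansing steps, the Python sketch below standardizes email addresses, converts spend values to numbers, normalizes two date formats to ISO 8601, and drops duplicates (keeping the first occurrence). The sample rows and the assumption that `05/03/2024` means day/month/year are hypothetical. The right date interpretation always depends on the source.

```python
from datetime import datetime

raw_rows = [
    {"email": "Alice@Example.com ", "signup": "2024-03-05", "spend": "120"},
    {"email": "alice@example.com", "signup": "05/03/2024", "spend": "120"},
    {"email": "bob@example.com", "signup": "2024-04-11", "spend": "35.5"},
]

# Accepted input date formats; the day-first format is an assumption about the source.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(value):
    """Convert a date string in any accepted format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # try the next accepted format
    raise ValueError(f"unrecognized date: {value!r}")


def cleanse(rows):
    """Standardize formats, convert types, and drop duplicate emails (first wins)."""
    seen = set()
    result = []
    for row in rows:
        email = row["email"].strip().lower()  # standardize before comparing
        if email in seen:
            continue  # duplicate entry
        seen.add(email)
        result.append({
            "email": email,
            "signup": parse_date(row["signup"]),
            "spend": float(row["spend"]),
        })
    return result


print(cleanse(raw_rows))
```

Standardizing the email before the duplicate check matters: without it, the first two rows would look like different customers.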

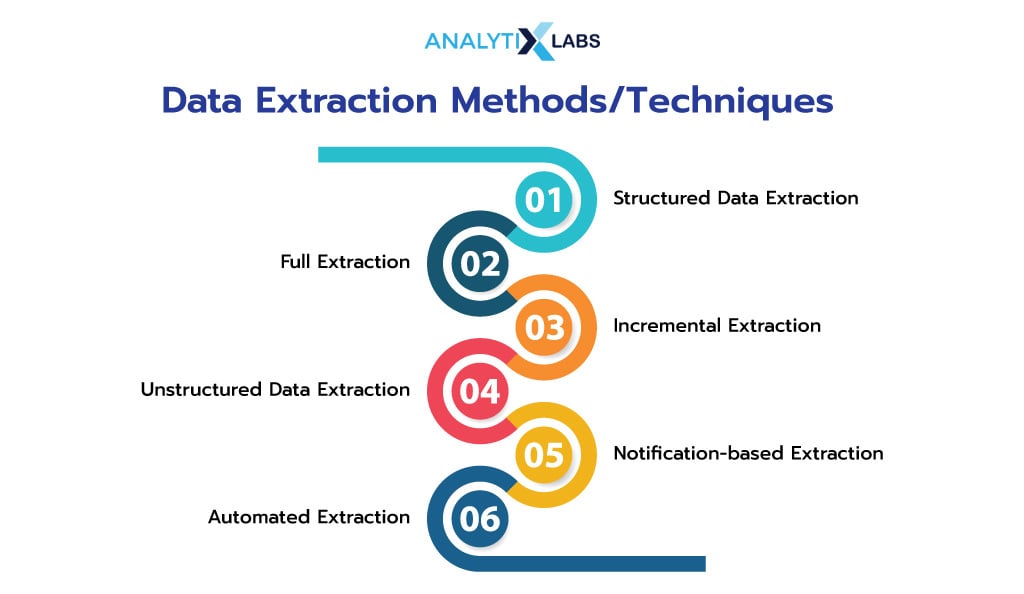

Data Extraction Methods/Techniques

One of the most common methods is manual extraction, where people locate the data they need in an online source or document and copy it by hand. This requires knowing where to find the required information. While manual extraction gives close control over what is extracted, it is time-consuming and prone to human error.

Methods better suited to extracting large volumes of data include:

Structured Data Extraction

Structured data extraction (SDE) is the method of extracting information from structured sources, such as databases and spreadsheets. It involves writing queries or code that specify the exact fields to extract from the source.

This offers accuracy and control over the extracted data, but it requires technical skills, in particular SQL (Structured Query Language) proficiency to query and retrieve specific data based on predefined criteria.
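
For a spreadsheet exported as CSV, the same "name the exact fields, filter on criteria" idea can be sketched in Python with the standard `csv` module. The column names and sample rows are hypothetical. The call at the end is roughly equivalent to the SQL query `SELECT employee_id, name, salary FROM employees WHERE department = 'Engineering'`.

```python
import csv
import io

# A spreadsheet exported as CSV (sample data; column names are hypothetical).
SHEET = """employee_id,name,department,salary,hire_date
101,Ana,Sales,52000,2021-04-01
102,Raj,Engineering,87000,2019-09-15
103,Li,Engineering,91000,2022-01-10
"""

FIELDS = ["employee_id", "name", "salary"]  # the exact fields to extract


def extract_fields(text, fields, **criteria):
    """Return only `fields` from rows whose columns equal every value in `criteria`."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        {f: row[f] for f in fields}
        for row in reader
        if all(row[col] == val for col, val in criteria.items())
    ]


print(extract_fields(SHEET, FIELDS, department="Engineering"))
```

Values stay as strings here because CSV carries no type information; converting them is a separate transformation step.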

Full Extraction

In the full extraction approach, all the data from a particular source is extracted in one go. It is suitable for smaller datasets or cases where data updates are infrequent. Full extraction is simple and ensures comprehensive data coverage but can be time-consuming and resource-intensive for larger datasets.

Incremental Extraction

Incremental extraction retrieves only the records that are new or have changed since the previous extraction run and adds them to the existing dataset. For example, a recurring web scraping job might collect only the listings posted since its last run instead of re-scraping the entire site.

For large datasets, incremental extraction is more efficient than full extraction because it avoids re-extracting all the data on every update. Timestamps or unique identifiers are commonly used to track changes between runs.
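
The contrast between full and incremental extraction can be sketched with a timestamp "watermark" in Python and SQLite. The `orders` table and its `updated_at` column are hypothetical. The idea is that each run stores the latest timestamp it saw and the next run asks only for rows newer than that.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, item TEXT, updated_at TEXT)")
conn.executemany(
    "INSERT INTO orders (item, updated_at) VALUES (?, ?)",
    [("pen", "2024-06-01T09:00:00"), ("ink", "2024-06-02T10:30:00")],
)


def full_extract(conn):
    """Full extraction: pull every row on every run."""
    return conn.execute("SELECT id, item, updated_at FROM orders ORDER BY id").fetchall()


def incremental_extract(conn, watermark):
    """Incremental extraction: pull only rows changed after `watermark`.

    Returns the new rows and the updated watermark to store for the next run.
    ISO 8601 strings in one fixed format sort correctly as text, so > works.
    """
    rows = conn.execute(
        "SELECT id, item, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark


# First run: nothing extracted yet, so start from an early watermark.
batch1, mark = incremental_extract(conn, "1970-01-01T00:00:00")
# New data arrives between runs.
conn.execute("INSERT INTO orders (item, updated_at) VALUES (?, ?)", ("pad", "2024-06-03T08:15:00"))
batch2, mark = incremental_extract(conn, mark)
print(len(full_extract(conn)), len(batch1), len(batch2))  # 3 2 1
```

A production job would also need to handle rows that arrive late with a timestamp equal to or older than the stored watermark, for example by combining the timestamp with a unique identifier.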

Unstructured Data Extraction

The technique used to extract data from unstructured sources, such as documents, web pages, emails, and other text-based formats, is known as Unstructured Data Extraction (UDE). This approach involves using software tools or programs that can detect and recognize patterns in the source document to identify key information for extraction.

UDE methods can extract large amounts of data quickly, but their results are typically less accurate than those of manual or structured extraction. Techniques such as natural language processing (NLP) and text mining are used to pull valuable insights from unstructured data sources.
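
The simplest form of pattern-based extraction uses regular expressions, sketched below in Python. This is a deliberately basic stand-in, not an NLP technique. The patterns assume specific formats (for example an `INV-YYYY-NNNN` invoice ID) and would miss variations that statistical or NLP-based extractors are designed to handle, which illustrates the accuracy trade-off described above.

```python
import re

EMAIL_BODY = """Hi team,
Invoice INV-2024-0042 for $1,250.00 is due on 2024-07-15.
Please contact billing@example.com with questions.
"""

# Hand-written patterns for the fields of interest (assumed formats).
PATTERNS = {
    "invoice_id": r"\bINV-\d{4}-\d{4}\b",
    "amount": r"\$\d{1,3}(?:,\d{3})*(?:\.\d{2})?",
    "due_date": r"\b\d{4}-\d{2}-\d{2}\b",
    "email": r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+",
}


def extract_invoice_fields(text):
    """Return the first match for each pattern, or None when a field is absent."""
    result = {}
    for field, pattern in PATTERNS.items():
        match = re.search(pattern, text)
        result[field] = match.group(0) if match else None
    return result


print(extract_invoice_fields(EMAIL_BODY))
```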

Notification-based Extraction

Notification-based extraction is an automated process in which external sources are monitored for changes and a notification is sent when new information is available. For instance, a notification can trigger the extraction process whenever new records are added or existing data is updated.

It is well suited to incremental updates from sources that change frequently, because extraction runs when there is something new to pull. Examples of notification mechanisms include email alerts and RSS feeds. This approach provides real-time or near-real-time data availability.
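
The push model behind notification-based extraction can be sketched in Python as a simple subscriber pattern. The `OrderSource` class is a toy, hypothetical stand-in for a real change feed such as a webhook, message queue, or database trigger. Each new record notifies the subscribed callback, which performs the extraction immediately instead of waiting for a scheduled scan.

```python
from typing import Callable


class OrderSource:
    """A toy data source that notifies subscribers whenever a record is added.

    Stands in for a real change feed (webhook, message queue, database trigger).
    """

    def __init__(self):
        self._records = []
        self._subscribers: list[Callable[[dict], None]] = []

    def subscribe(self, callback: Callable[[dict], None]) -> None:
        self._subscribers.append(callback)

    def add(self, record: dict) -> None:
        self._records.append(record)
        for notify in self._subscribers:
            notify(record)  # push the change instead of waiting to be polled


extracted = []


def on_new_record(record: dict) -> None:
    """Extraction step triggered by the notification: copy only the needed fields."""
    extracted.append({"id": record["id"], "total": record["total"]})


source = OrderSource()
source.subscribe(on_new_record)
source.add({"id": 1, "total": 9.5, "note": "gift"})
source.add({"id": 2, "total": 20.0, "note": ""})
print(extracted)
```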

Automated Extraction

Automated extraction uses software tools or programs to extract data from external sources without manual intervention. It relies on scripts, APIs, web crawlers, and other automated processes to query for information. Automation speeds up extracting large amounts of data and reduces the chance of human error.

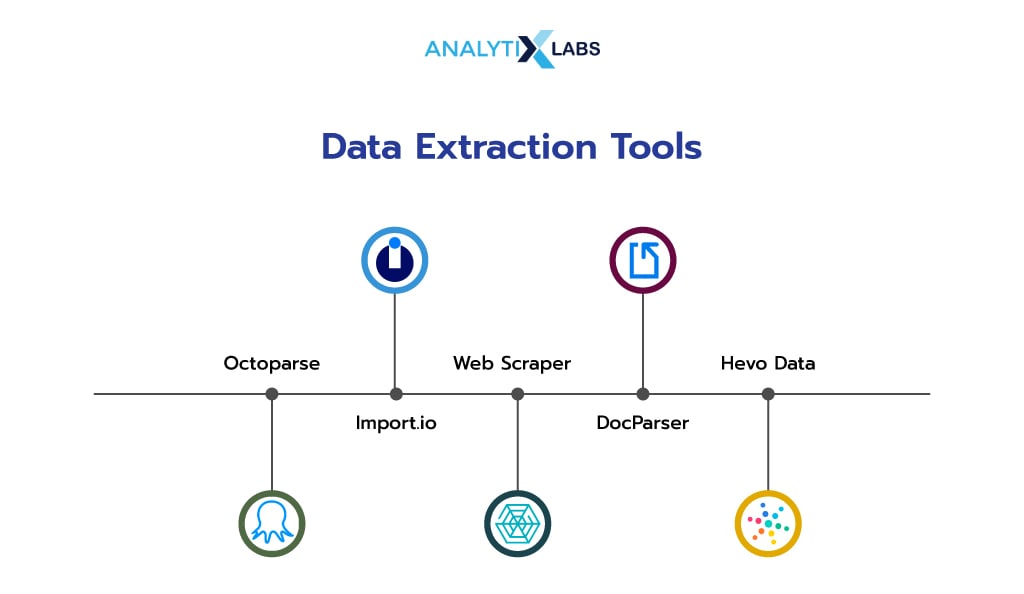

Data Extraction Tools to Learn

Octoparse

Octoparse is a user-friendly web scraping tool that enables users to extract data from websites without coding. It provides a visual interface allowing users to navigate web pages, select data elements, and set up extraction rules easily.

Octoparse supports various data formats and offers features like automatic IP rotation, schedule-based extraction, and cloud-based data storage. It is popular with both beginners and experienced users for its flexibility and scalability in handling complex data extraction tasks.

Import.io

Import.io is a comprehensive data extraction platform that offers both web scraping and data integration capabilities. It enables users to extract data from websites and APIs with minimal effort.

Import.io provides a point-and-click interface, making it accessible to non-technical users. It also offers advanced features like data transformation, integration with third-party tools, and data export in various formats. With its AI-powered extraction engine, Import.io can handle complex web scraping tasks and deliver accurate, structured data.

Web Scraper

Web Scraper is a browser extension tool for popular web browsers like Chrome and Firefox. It enables users to extract data from websites by creating custom scraping rules. Users can select data elements on web pages using CSS selectors and define extraction logic using a visual interface.

Web Scraper supports pagination, form filling, and scheduling options. It is a simple yet effective tool for small-scale web scraping projects, making it suitable for beginners and users with basic coding knowledge.

DocParser

DocParser is a specialized data extraction tool for documents such as PDFs, invoices, receipts, and contracts. It uses machine learning algorithms to automatically identify and extract relevant data fields from unstructured documents.

DocParser offers features like custom parsing rules, data validation, and integration with other applications via API. It is widely used in industries like finance, accounting, and logistics, where extracting data from documents is a common requirement.

Hevo Data

Hevo Data is a cloud-based data integration tool providing data extraction capabilities. It lets users pull data from various sources, including databases, applications, and streaming platforms. Hevo Data offers pre-built connectors for popular data sources and supports real-time data extraction.

It provides a visual interface for setting up data extraction workflows and offers data transformation and enrichment options. With its ability to handle large volumes of data and integrate with data warehouses and analytics tools, Hevo Data is suitable for enterprises with complex data integration needs.

Data Extraction and Business Intelligence

Business Intelligence (BI) involves data collection, analysis, and visualization to help businesses make better decisions. Data extraction is crucial in BI because it provides the raw material that is processed and analyzed to generate actionable insights.

BI systems rely on data extraction techniques to gather data from various sources, organize it into a structured format, and integrate it with other data sets. This data is then processed through various analytical methods, such as reporting, data mining, and machine learning, to generate valuable business insights.

Future of Data Extraction: What’s in Store?

As the world becomes more connected and generates ever more data, the importance of data extraction is likely to keep growing. Here are some trends shaping its future:

- Machine Learning and Artificial Intelligence: ML and AI technologies are being used to improve the quality and efficiency of data extraction algorithms. They can help identify patterns and trends, allowing for more accurate and effective data extraction.

- Big Data: With the rise of Big Data, there is a growing need to extract valuable information from these large, complex data sets. Data extraction tools that can handle Big Data are becoming increasingly vital in this context.

- Real-time data extraction: Businesses increasingly demand real-time extraction capabilities, which require technologies that can pull data quickly and accurately from high-volume data streams.

- Cloud Computing: The cloud is becoming a ubiquitous and efficient way to store and access data, so cloud-based data extraction solutions are becoming necessary for businesses to stay competitive.

Overall, the future of data extraction looks bright as these technologies continue to evolve and provide new ways to process and analyze information. Data extraction tools are likely to become smarter, faster, and more powerful in the coming years, allowing businesses to use their massive datasets more effectively.

Understanding what data extraction is and how it works now will be essential for professionals looking to capitalize on the career opportunities presented by the exciting field of data extraction in the future.

Frequently Asked Questions (FAQs)

What is an example of data extraction?

An example of data extraction is extracting customer information from a relational database for analysis and decision-making.

Using SQL or ETL tools, a company can extract customer demographics, purchase history, and interaction data from its CRM database. Analyzing this data offers insights into customer behavior, preferences, and trends, empowering organizations to tailor marketing strategies, boost customer satisfaction, and fuel business growth.

What is data extraction in SQL?

Data extraction in SQL refers to retrieving specific data from a relational database using SQL queries. IT professionals, data engineers, and developers can extract relevant information from structured databases based on specific criteria or conditions.

Using SQL clauses such as SELECT, FROM, WHERE, and JOIN, professionals can retrieve data from tables, filter results, aggregate data, and perform complex operations.

SQL’s flexibility and robust querying make it a popular language for extracting data from relational databases, forming a strong base for subsequent data analysis and decision-making.
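
As an illustration, here is a minimal sketch that runs such a query through Python's built-in `sqlite3` module. The `customers` and `purchases` tables and their data are hypothetical, loosely modeled on the CRM example above. The query joins the two tables, filters by region, and aggregates each customer's purchase history.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, region TEXT);
    CREATE TABLE purchases (customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Alice', 'EU'), (2, 'Bob', 'US'), (3, 'Chen', 'EU');
    INSERT INTO purchases VALUES (1, 40.0), (1, 60.0), (2, 25.0), (3, 10.0);
    """
)

# SELECT picks columns, FROM/JOIN combine tables, WHERE filters rows,
# and GROUP BY with COUNT/SUM aggregates each customer's purchases.
query = """
    SELECT c.name, COUNT(*) AS orders, SUM(p.amount) AS total_spent
    FROM customers AS c
    JOIN purchases AS p ON p.customer_id = c.id
    WHERE c.region = ?
    GROUP BY c.id, c.name
    ORDER BY total_spent DESC
"""
for row in conn.execute(query, ("EU",)):
    print(row)
```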

What is a data extraction tool?

A data extraction tool is specialized software designed to streamline extracting data from various sources, converting it into a usable format, and loading it into a desired destination.

These tools save time through user-friendly interfaces, pre-built connectors, and transformation features. Apache NiFi, a notable open-source tool, is one example: it supports extraction from sources such as databases, APIs, and file systems.

Data extraction tools enhance efficiency and accuracy, enabling professionals to extract data from diverse sources and effectively support integration and analysis initiatives.
